XYZT Lab

Creating a high-fidelity 3D streaming platform from an autonomous robot

During the spring and fall 2021 semesters, I performed undergraduate research with the XYZT Lab at Purdue. I was advised by Professor Zhang and worked closely with one of his PhD students, Yi-Hong Liao.

The lab develops advanced vision and perception technology that is applicable to challenges in autonomous robotics. I worked on a team with three other undergraduate students to integrate the lab's technology onto a mobile robot.

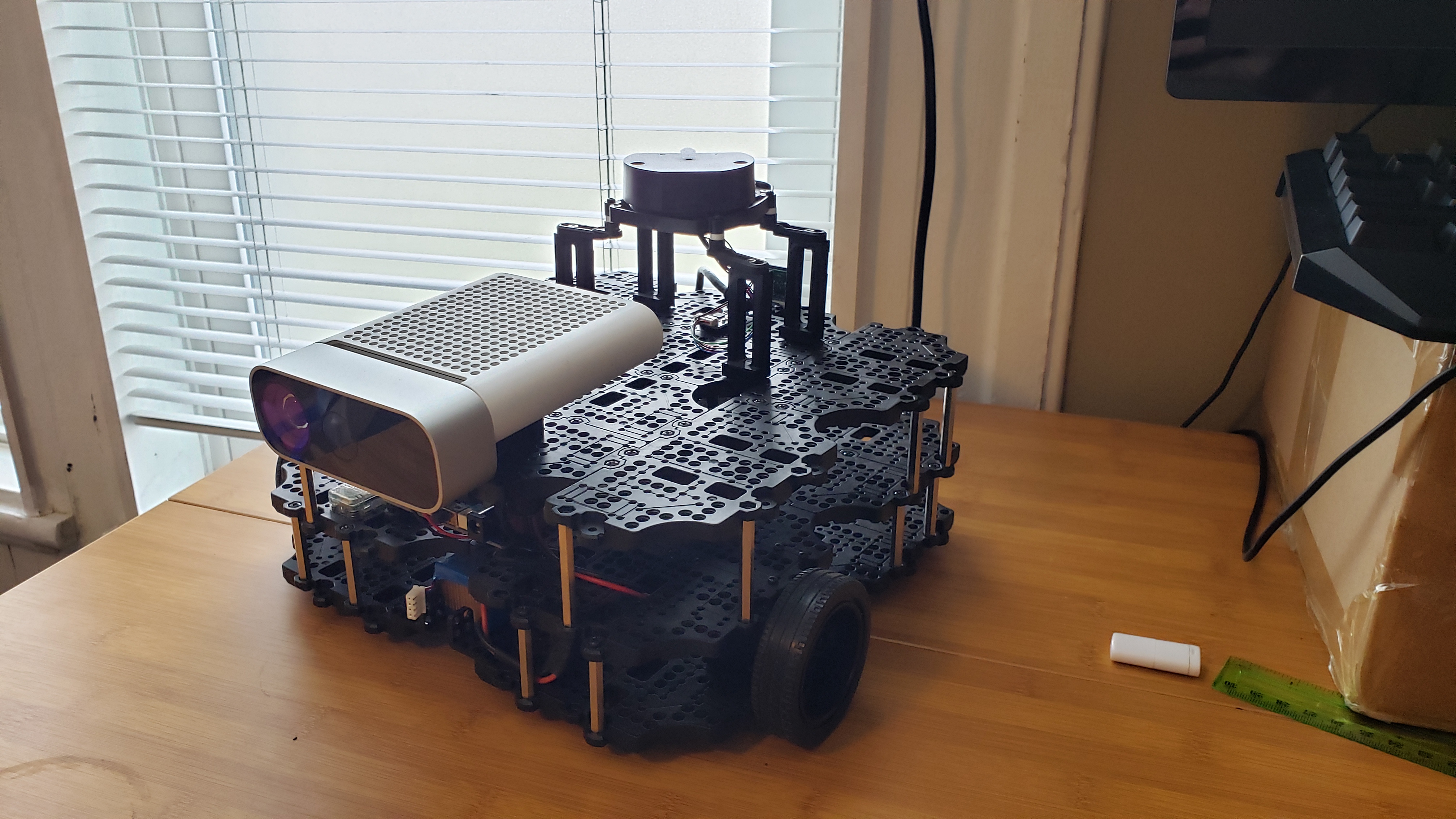

The project focused on building a system that can react to simple voice commands in an unknown environment while streaming 3D perception information to remote operators. The base platform is a TurtleBot3 Waffle Pi, augmented with an Azure Kinect 3D sensor. The sensor includes a microphone array capable of sound source localization.

My specific work included creating functional demonstrations of:

- waypoint navigation using the TurtleBot stack

- sound source localization / tracking using ODAS

- offline speech-to-text recognition using PocketSphinx

- integration of all the above

- 3D perception streaming through RobotWebTools

I also developed Docker images and documentation for running my code, and I advised other lab members on ROS best practices.

At the final demonstration, the system was capable of recognizing a request to “come here”, determining the direction of the speaker, and navigating in the direction of the request. Future work will include more robust integration of the costmap data with the sound source localization, and continued algorithm tuning.
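As a rough illustration of the "come here" behavior, the sketch below shows one way a recognized phrase and a sound-source bearing could be turned into a navigation goal. It is not the project's actual code: the function names, the `step` distance, and the convention that the bearing is an azimuth in radians measured counterclockwise from the robot's forward axis are all assumptions made for this example.

```python
import math


def matches_command(transcript, phrase="come here"):
    """Return True if the (hypothetical) target phrase appears in the transcript.

    Case and repeated whitespace are ignored.
    """
    normalized = " ".join(transcript.lower().split())
    return phrase in normalized


def goal_from_sound_direction(robot_x, robot_y, robot_yaw, azimuth, step=1.0):
    """Compute a goal pose (x, y, yaw) in the map frame.

    robot_x, robot_y, robot_yaw: current robot pose in the map frame.
    azimuth: assumed bearing of the speaker relative to the robot's
             forward axis, in radians, counterclockwise positive.
    step: assumed distance (in meters) to travel toward the speaker.
    """
    heading = robot_yaw + azimuth
    # Wrap the heading into [-pi, pi] so downstream code sees a canonical angle.
    heading = math.atan2(math.sin(heading), math.cos(heading))
    goal_x = robot_x + step * math.cos(heading)
    goal_y = robot_y + step * math.sin(heading)
    return goal_x, goal_y, heading


if __name__ == "__main__":
    if matches_command("Please COME   here"):
        # Robot at the origin facing +x, speaker directly to its left.
        print(goal_from_sound_direction(0.0, 0.0, 0.0, math.pi / 2, step=2.0))
```

In a ROS-based system, a pose like this would typically be converted to a quaternion orientation and sent to the navigation stack as a goal. Checking the goal against the costmap before sending it is the kind of integration described as future work.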

Potential applications include crisis response and search and rescue. The technology could be used to generate detailed 3D maps while the mobile robot follows an untrained individual’s voice. These maps can then assist first responders by providing them with enhanced situational awareness.

Some of my work is publicly available on the project’s GitHub repository. We anticipate publishing a paper and creating a press release through the Purdue ME department in the spring.